This research was generously funded by the Knight Foundation and Craig Newmark Philanthropies.

Executive Summary

In December 2016, shortly after the US presidential election, Facebook and five US news and fact-checking organizations—ABC News, Associated Press, FactCheck.org, PolitiFact, and Snopes—entered a partnership to combat misinformation. Motivated by a variety of concerns and values, relying on different understandings of misinformation, and with a diverse set of stakeholders in mind, they created a collaboration designed to leverage the partners’ different forms of cultural power, technological skill, and notions of public service.

Concretely, the partnership centers on managing a flow of stories that may be considered false. Here’s how it works: through a proprietary process that mixes algorithmic and human intervention, Facebook identifies candidate stories; these stories are then served to the five news and fact-checking partners through a partners-only dashboard that ranks stories according to popularity. Partners independently choose stories from the dashboard, do their usual fact-checking work, and append their fact-checks to the stories’ entries in the dashboard. Facebook uses these fact-checks to adjust whether and how it shows potentially false stories to its users.

Variously seen as a public relations stunt, a new type of collaboration, or an unavoidable coupling of organizations through circumstances beyond any one member’s exclusive control, the partnership emerged as a key example of platform-publisher collaboration. This report contextualizes the partnership, traces its dynamics through a series of interviews, and uses it to motivate a general set of questions that future platform-press partnerships might ask themselves before collaborating.

Key findings

While the partnership is ostensibly devoted only to fact-checking, I also find it can be read as:

- tensions among multiple origin stories and motivations

- competition among varied organizational priorities

- different assumptions about access and publicness

- exertions of leverage and different types of power

- establishment of categories and standards

- negotiations among different types of scale

- competing rhythms and timelines of action

- experiments in automation and “practice capture”

- varied understandings of impact and success

- ongoing and unevenly distributed management of change

Taken together, these dynamics suggest a more general model of partnership between news organizations and technology companies—an image of what platform-press collaboration looks like and how it is negotiated.

I must emphasize that although it is the study’s central backdrop, this project is not about “fake news.” It is about the values and tensions underlying partnerships between news organizations and technology companies.

Beyond the particular example I focus on in this report, such partnerships have often existed, are likely to continue, and now influence many of the conditions under which journalists work, the way news circulates, and how audiences interpret stories. The press’s public accountability, technologists’ responsibilities, and journalists’ ethics will increasingly emerge not from any single organization or professional tradition; rather, they will be shaped through partnerships that, explicitly and tacitly, signal which metrics of success, forms of expertise, types of power, and standards of quality are expected and to be encouraged.

Most broadly, and as a set of concluding recommendations details, such partnerships between news organizations and technology companies need to grapple with their public obligations. Publishers and platforms may see themselves as strictly private entities with the freedom to join or leave partnerships, define for themselves their public responsibilities, and make independent judgments about what is best for their users and readers. But when they meet and occupy such central positions in media systems, they create a new type of power that needs a new kind of scrutiny. An almost unassailable, opaque, and proprietarily guarded collective ability to create and circulate news, and to signal to audiences what can be believed—this kind of power cannot live within any single set of self-selected, self-regulating organizations that escapes robust public scrutiny.

Introduction

The partnership between Facebook and news and fact-checking organizations—hereafter simply referred to as “the partnership”—originally emerged from, and continues to exist amidst, a complex set of cultural and technological forces that go well beyond the bounds or control of any one of its members. Though focused on a particular practice and relatively small set of organizations, the partnership does not exist in isolation, and its significance and dynamics cannot easily be bracketed.

Before discussing the interviews I conducted with partnership members, I want to place the partnership in context, briefly reviewing forces that, although not always explicitly discussed by interviewees, create the partnership’s sociotechnical backdrop.

History and definitions of ‘fake news’

Fake news is nothing new. Writing in Harper’s Magazine in 1925, in an era when modern rituals of journalistic professionalism were just emerging, journalism schools were still new, and the press was struggling to distinguish itself from public relations agents, Associated Press editor Edward McKernon worried that what “makes the problem of distributing accurate news all the more difficult is the number of people—a number far greater than most readers realize—who are intent on misinforming the public for their own ends. The news editor has to contend not only with rumor, but with the market rigger, the news faker, the promoter of questionable projects, and some of our best citizens obsessed with a single idea.”1

McKernon’s worry back then was not even new. From the seventeenth through the nineteenth centuries, partisan pamphleteers, coureurs (singers who ran among towns spreading falsities through song), artists, and photographers, in both Europe and the United States, capitalized on relatively low literacy rates, changes in media technologies, and seemingly insatiable public appetites for sensationalism to deceive audiences across a variety of media.

Writers and journalists in each era struggled with how to make claims about the realities of their worlds, how to defend themselves against charges of deception, how to create words and imagery that would resist misinterpretation and manipulation, how to hold powerful people accountable through irrefutable facts,2 3 4 5 6 and how to be both fact-makers and fact-checkers with political power.7 And media scholars watching the emergence of the early world wide web echoed these concerns, observing that online “facts more easily escape from their creator’s or owner’s control and, once unleashed, can be bandied about.”8

Surveying a vast amount of contemporary literature on how facts are made and checked in online environments, RAND researchers describe a general phenomenon they call “truth decay,” with four dimensions:

- “an increasing disagreement about facts and analytical interpretations of facts and data;

- a blurring of the line between opinion and fact;

- an increase in the relative volume, and resulting influence, of opinion and personal experience over fact;

- and lowered trust in formerly respected sources of factual information.”9

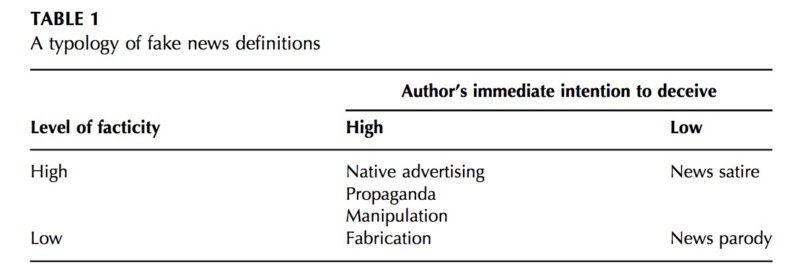

Implicit in this model, and in each era’s debates over the creation and circulation of facts, are discussions about what exactly it means for a piece of information to be fake or untrue. Summarizing scholarship from a variety of fields, Caroline Jack offers the following typology, giving precision to contemporary debates about “fake news”:

- Misinformation: “information whose inaccuracy is unintentional”

- Disinformation: “information that is deliberately false or misleading”

- White vs. gray vs. black propaganda: “systematic information campaigns that are deliberately manipulative or deceptive” and that vary in the degree to which sources are known and named

- Gaslighting: deceptions that stop victims from “trusting their own judgments and perceptions”

- Dezinformatsiya: a Soviet phenomenon in which the state disseminates “false or misleading information to the media in targeted countries or regions”

- Xuanchuan: a Chinese term used to describe flooding online “conversational spaces with positive messages or attempts to change the subject”

- Satire, parody, hoax, and culture jamming: the use of misinformation for cultural critique and commentary10

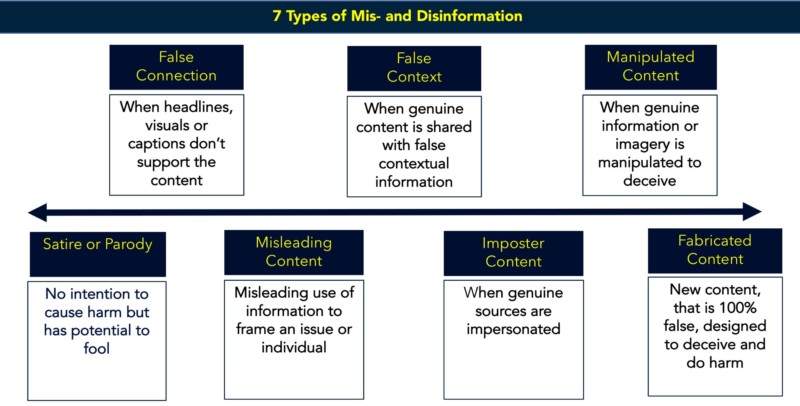

In a complementary model, Claire Wardle similarly argues against using the term “fake news,”11 not only because it has become a cliché and convenient way for politicians (often President Trump) to dismiss information they dislike, but because it confuses contexts, meanings, and intentions that need to be named and distinguished if online media systems are to be places for high-quality communication and collective self-government. She rightly renames “fake news” as a space of discourse and actors, populated by different types of information, and by a variety of motivations (see Figure 1).

Figure 1: Types of mis- and disinformation, mapped to motivations. Taken from Wardle (2017).

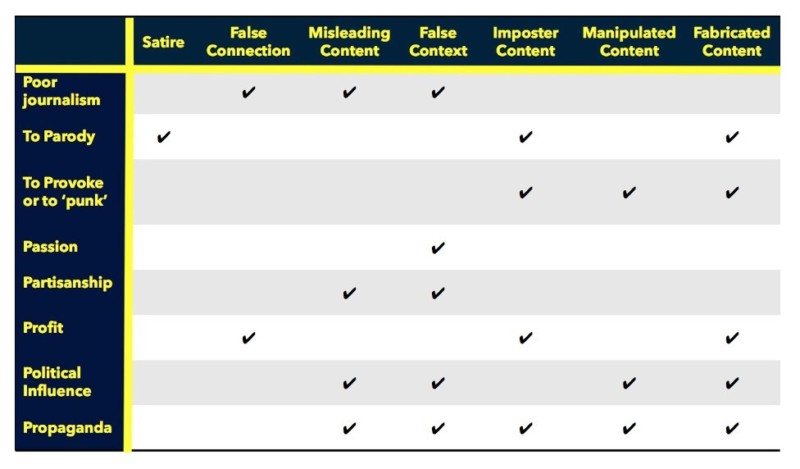

Focused on how the term “fake news” appears within news and news advertising, and how journalists seem to understand the concept, Edson Tandoc, Zheng Wei Lim, and Richard Ling similarly find that the term is best understood as an interplay between types of media representations—how claims to the truth or attempts to deceive appear in words or imagery—and an author’s intentions, creating a map of contemporary news genres implicated by misinformation (see Figure 2):12

Figure 2: A typology of fake news definitions. Taken from Tandoc et al. (2017).

My aim is not to adopt any one of these typologies or argue their relative merits. Rather, it is to highlight that the partnership exists within a longstanding history and complex space of definitions of fact-checking.

Institutionalized fact-checking

These histories and debates have relatively recently crystallized into patterns of action performed by a distributed and increasingly professionalized community of fact-checkers, organized, for example, through the International Fact-Checking Network.13 As Graves chronicles in his foundational study of both the history of US fact-checking and its emergence as a subfield of political journalism, fact-checkers “see themselves as a distinct professional cohort—a self-described fact-checking movement within journalism” with members in major broadcasting networks, online newsrooms, and papers big and small.14 Often collaborating with each other, fact-checkers

practice journalism in the networked mode. They link promiscuously to outside news sources, encourage other reporters to cite their work, and strike distribution deals with major media organizations. Fact-checkers have achieved a high profile in elite media-political networks.15

Researchers further tell us that fact-checking emerges when governance and communications institutions are perceived to be weak.16 They have uncovered variations in journalists’ fact-checking and verification practices—e.g., some focus on confirming more easily verifiable information like names and numbers over more complex causal claims; some routinely pass on unverified and unattributed information assuming that a colleague will do the fact-check; and others create a closed, circular process in which verification only refers to a journalist’s earlier work.17

Additionally, journalists are often skeptical about whether certain kinds of facts and fact-checking practices will be seen as professionally acceptable—when deciding whether or how to fact-check, they think about their owners’ and publishers’ missions, and how their colleagues will perceive verification.18 19 And while they are often ambivalent about the utility of fact-checking tools and services,20 they largely express a desire to fact-check, seeing it not just as a professionally responsible move, but also as a way to distinguish themselves from lower-status reporters, signaling their affiliation with an elite and prestigious world of verified truth-telling.21

Beyond fact-checking within stories, especially in online contexts, there are active debates about whether and how to refute false information that has already been published. There is uncertainty surrounding whether post-hoc fact-checks change audiences’ beliefs or actions22 and whether labeling an article as disputed and showing audiences related information makes people more or less willing to change their beliefs.23 24 Such dynamics are also wrapped up in audiences’ feelings about the media as a whole and how they perceive themselves: people’s willingness to accept corrections depends partly on how much they trust the media.25 Some people share news that they know to be misleading, false, and/or confusing26 because their relationship to news is defined not by rationally processing claims and counter-claims, but by deciding how well information and narratives align with their self-identities, affiliations, and desires.27 Trafficking in false news is not about a logical exchange of claims and counter-claims; it is about who people see themselves as, who they believe they are or want to be.

A complete review of this large and fast-changing literature is beyond the scope of this report, but the general point stands: fact-checking is its own subfield of journalism with a diversity of goals, values, and practices; journalists themselves are sometimes unclear about what role fact-checking can or should play in their work; and, especially in online environments, there is active debate about how and when fact-checking should engage audiences. A partnership designed at the intersection of institutionalized fact-checking and the world’s largest social media platform cannot avoid these dynamics.

A networked, liminal, platform press

These histories of misinformation, debates about its power and remedies, and a diverse institutional field of fact-checkers are complicated by a lack of agreement over what “the press” means anymore. It can be difficult to argue precisely that fact-checkers are a subfield of journalism, when the press—the institutional forces that shape how journalists work, information circulates, and audiences interpret news—is currently populated by so many different characters. This new entity is variously called the “networked press,”28 the “liminal press,”29 “online distribution networks,”30 the “hybrid media system,”31 and the “platform press.”32

There is increasing recognition that the conditions under which journalists work, news is produced, and information circulates are defined not only by journalists, their sources, markets, and the state, but also by technologists, software designers, databases, and algorithms that influence how news is made and made meaningful, through both human judgment and computational processes.

Additionally, news organizations are entering into partnerships among themselves and with other kinds of organizations.33 For example:

- Panama Papers consortium34

- ProPublica’s numerous partnerships with other news organizations and collaboration with audiences on everything from fighting hate speech to explaining election processes35

- technology-focused partnerships36 like Facebook’s Instant Articles, Snapchat’s Discover, Google’s Accelerated Mobile Pages, Twitter’s Amplify, and Amazon’s discounted access for subscribers to The Washington Post

- Tech and Check Cooperative’s project to bring together universities, the Internet Archive, and Google for automated, real-time fact-checking of the US State of the Union address37

- First Draft’s CrossCheck Newsroom project that convenes technology companies (Google and Facebook are partners), international news organizations, and online metrics and analytics companies to fight the circulation of misinformation38

There is a seemingly endless set of strategic, experimental relationships between platforms and publishers that can make it difficult to tell where media publishers end and technology companies start. Fact-checking is quickly expanding beyond a relatively small set of news organizations to include new actors who do not necessarily share journalistic training or practices.

Sometimes, though, these distinctions are easier to see. For example, though it has repeatedly stated its support for the news industry and entered partnerships with various publishers and media researchers, Facebook has earned the ire of journalists and publishers around the world when it has unilaterally changed how news appears on its platform.

In October 2017, in six countries, it moved the “non-promoted posts” (i.e., unpaid posts) that many news organizations used to distribute content on the platform out of the main Facebook newsfeed. Many publications—especially small and non-elite publishers that relied heavily on Facebook distribution—reported immediate drops of sixty to eighty percent in Facebook engagement with their stories.39 In March 2018, Facebook announced that it was ending the experiment and “acknowledged the criticism that [it] had acted high-handedly by completely changing the media landscape for six countries without warning or input from stakeholders.”40

In January 2018, in an attempt to help users “have more meaningful social interactions,”41 Facebook announced that it would, among other changes, show people fewer news articles, marketing messages, and advertisements42—grouping these types of content as inconsistent with the company’s mission to increase people’s “happiness and health.”43 Later that same month, the company announced a two-question survey it designed to help it determine the quality and trustworthiness of news organizations by aggregating and analyzing users’ responses, presumably using the data to algorithmically rank news content.

And most recently, Zuckerberg refined Facebook’s earlier newsfeed revision, which had deprioritized news, announcing that the company would “promote news from local sources.”44 In each move, although the company often acknowledges the need for transparency and promises to give more notice and to consult more closely, it also signals that, despite its partnerships with news organizations, it retains sole control over how news circulating on its platform will be judged relevant, meaningful, and trustworthy—and that it will update those judgments as it sees fit.

These tensions—between how publishers and platforms understand news content—play out amidst larger conversations about the power and responsibilities of platforms writ large. Although platforms like Facebook and Google give people ways to export their data, they effectively act as a duopoly: it is practically impossible to avoid the platforms and be total non-users.45 Together they control approximately sixty to seventy percent of the US digital advertising market,46 and it is virtually impossible for new social network sites to emerge and compete.47

Having achieved this awesome power, platform companies are constantly negotiating. Insisting that they are technology makers and not media organizations, they resist calls to be regulated like quasi-utilities and held accountable to public interest standards. Yet to remain consistent with the message they send to advertisers and investors that they are powerful, effective, and efficient controllers of public attention, they must acknowledge their power and attempt a kind of self-regulation that is variously seen as a sincere attempt at accepting media-like responsibility or a strategic delaying tactic meant to ward off formal oversight.48 49 50

And while simultaneously creating and struggling under the complexities of such global scale, platforms filter users through proprietary content moderation rules—privately maintained policies designed and implemented to reflect the platform’s terms of service and its definitions of community standards, not necessarily openly debated public interests or journalistic values.51 52 They effectively carve out for themselves a new kind of sovereign capitalism by creating massive, opaquely maintained rules that are almost impossible for people to avoid, understand, or hold accountable through existing models of governance.53 Appreciating that transparency alone is often insufficient for public accountability,54 media researchers are often stuck calling for algorithmic transparency,55 56 trying to access and understand platform data,57 designing ways of auditing platforms without having to ask permission,58 and pushing for regulatory reforms that would let them do so legally.59

Platform-publisher relationships are not always innocuous or easily fixed. For example, in 2017 Facebook was heavily criticized as its platform was used to spread misinformation across Myanmar (a country where the number of Facebook users exploded from two million in 2014 to thirty million in 2017). Media researchers and aid groups watched as Burmese government officials and others used the platform to spread propaganda and disinformation. Human rights groups said that the government took advantage of Facebook’s ubiquity, algorithmic priorities, scant appreciation of local language and culture, and disempowered opposition groups to organize and accelerate ethnic cleansing and genocide of the Rohingya minority.60

Meanwhile, regulatory attempts are not always straightforward or desirable. While Section 230 of the Communications Decency Act gives platforms considerable freedom to moderate content according to internal company policies in the United States, the new German “NetzDG” law—designed to minimize the online circulation of false and harmful speech—gives platforms twenty-four hours to remove “obviously illegal” content before facing fines. Critics charge that the law will backfire, incentivizing platforms to make broad and sweeping determinations about speech and err on the side of censorship, ultimately working against public oversight by leaving questions “that require legal expertise. . .delegated to tech companies.”61

Though not always narrowly focused on fact-checking, these partnerships, content commissions, algorithmic changes, and community surveys point to uneven relationships between social media platforms—Facebook in particular—and news publishers. Together they determine conditions under which news is produced, distributed, and made meaningful, creating the broad social and technological forces within which fact-checking operates. They do not always do so equitably. The initial power to surface and showcase verified, fact-checked news online often lies with platform policies and algorithms that direct information exposure but resist close scrutiny and oversight—as does the power to intervene to correct misinformation and counter disinformation after the fact.

Technological politics and the 2016 US presidential election

One of the most powerful motivations for the partnership is the increasingly technological nature of politics and the 2016 US presidential election. As media scholars have chronicled across multiple elections and contexts,62 63 64 65 66 it is increasingly difficult to talk about technology companies and electoral politics as empirically separate phenomena.

Personnel from technology companies embed themselves within electoral campaigns; platforms sponsor electoral events and customize their user experiences for debates, conventions, and elections; and political parties spend considerable resources building technological infrastructures designed to mimic and leverage social media platforms and online advertising systems.67 Fact-checking that tries to counter partisan mis- and disinformation must contend not only with traditional campaign machinery, but political operations that are often intertwined with the very same technologies and practices that fact-checkers are trying to use to spread counter-partisan narratives.

It is beyond the scope of this report to analyze the large body of literature about the use of social media and disinformation campaigns by partisan actors during the 2016 presidential election campaign, but that protracted event is one of the most identifiable influences on the fact-checking partnership. There is no shortage of debate about what types of misleading and false information circulated during the campaign68 69 70 and whether such material affected the election outcome.71 In Congressional hearings, without making specific commitments to changing practices, platform company executives apologized for the role their companies played in helping to spread false and misleading electoral content.72 Since the election, some researchers have additionally critiqued the press’s own susceptibility to fake news, arguing that journalists let themselves be led by partisan sources of misinformation and, ultimately, were the ones who amplified misleading and false stories.73 74

The 2016 election highlighted a tension amongst journalists. They were often caught trying to figure out how to call out and contextualize the lies and misleading statements of office holders,75 while simultaneously having to defend their own work against politicians and fellow media producers who called fact-checks or undesirable stories “fake news.”76

Shortly after the election, the term “fake news” seemed to quickly lose what little meaning it ever had, with popular and alternative media spaces filled with dramatic charges that journalists and politicians were both lying, that we had entered a post-truth era that would cripple democracy, and that populism and participatory media had overreached and dramatically wounded the very idea of electoral governance, perhaps irreversibly so. (While standing by his reporting, one of the earliest and most prolific journalists covering platforms and electoral “fake news” went on to regret the term’s popularity and over-application, arguing that the governance and electoral problems created by a misinforming media system run deeper than any single phenomenon or platform practice.)77

For fact-checkers, the election both proved their essential democratic role and uncovered new challenges about how to operate at such large scale, in real time, for partisan audiences that seem to process claims and counter-claims differently. For example, researchers found that, between October 7 and November 14, 2016, although disinformation mostly reached Trump supporters (who heavily consumed conservative media where disinformation was more likely to live), Facebook was a “key vector of exposure to fake news and. . .fact-checks of fake news almost never reached its consumers.”78 This led the director of the International Fact-Checking Network, Alexios Mantzarlis, to conclude that fake news had “a relatively large audience, but it went deep with only a small portion of Americans. Fact-checkers also [drew] large audiences, but [didn’t] seem to bring the corrections to those who most need[ed] to read them.”79

Whether or not “fake news” had a consequential impact on the 2016 presidential election, it was a watershed moment for intersections between technology and politics, journalists’ and fact-checkers’ relationships to verification and accountability reporting, and popular understandings of political truth.

Technological innovation and automation

The partnership exists as innovations are beginning to surface that threaten the delicate social, institutional, and technological balances already struck to fight mis- and disinformation.

New video and audio manipulation technologies are quickly emerging that make it possible to produce realistic audio-video impersonations, making public figures with large amounts of publicly available training data particularly susceptible to being impersonated by fabricated sound and imagery.80 Such technologies have already been used to forge videos of criminal behavior that never happened81 and speeches that President Obama never gave.82 With sufficiently rich training data, rule systems, and computational rendering, such technologies become forms of artificial intelligence that can quickly create believable impersonations.

Such creations can increasingly be disseminated at previously unseen computational speeds. Estimates vary, but research suggests that more than half of all internet traffic is produced by automated programs83 and that vast secondary industries exist to automatically impersonate social media accounts.84 Between September 1 and November 16, 2016, Russian bots retweeted Trump 470,000 times (compared with 50,000 times for Clinton).85 Meanwhile, political processes in many countries are routinely influenced by “computational propaganda” systems that use a mix of automation and media manipulation to algorithmically spread disinformation and sow political confusion.86

This computational turn is being used by new associations of “trolls, white nationalists, men’s rights activists, gamergaters, the ‘alt-right,’ and conspiracy theorists” who employ social media platforms, memes, and bots to hijack media ecosystems and deceive journalists into adopting their narratives.87 Such groups are not new to media systems, but are showing increasing facility with disinformation tools and techniques that give them a new kind of largely unchecked power.

Finally, we are beginning to see the emergence of new grassroots, community-organized initiatives designed to thwart the local power of disinformation88 and crowdsource design communities and solutions to computational disinformation.89

I chronicle these new and upcoming forces not because any one of them represents the single most important threat or solution to mis- and disinformation, but because they represent a new set of technological and social forces that will likely continue to shape and challenge partnerships between technology companies and fact-checking organizations.

Method

My goal was to understand the partnership—among Facebook, PolitiFact, FactCheck.org, Snopes, ABC News, and the Associated Press—as a whole, not necessarily focusing on any one member. Among these six organizations, between August 2017 and January 2018, I conducted a mix of attributed and on-the-record, background, and off-the-record interviews with six people, representing four of the partners. I additionally spoke with two people outside of the partner organizations who have close knowledge of the partnership’s members, organizations, and processes. Each of the eight interviews lasted between twenty and ninety minutes, with several supplemented by follow-up email exchanges.

One partner organization could not be reached and another refused repeated interview requests, directing me instead to the organization’s public relations statements. I supplemented these interviews with analyses of publicly available documents, popular and trade-press accounts, and secondary scholarly accounts of organizations’ actions in other contexts.

I do not attribute any quotes within this report to individuals or their organizations. Even though some interviewees agreed to speak on the record, several would only speak with me anonymously—requesting that I not identify them or their organization, or quote directly from our interviews. My concern is that revealing the names of people or organizations who agreed to be named may, through a relatively simple process of elimination within a small group of people who know each other well, inadvertently reveal the identities or affiliations of those I agreed to grant anonymity. I understand that by not attributing anyone’s quotes I am perhaps not meeting the expectations of those who wanted to be named, but my conclusion was that a desire for attribution risks harming the need for anonymity, especially in such a small group.

I also made the decision not to attribute any quotes out of a desire to focus on the partnership as a whole and not any particular organization, as well as to demonstrate how researchers might create network-level interview-based accounts of organizational partnerships. Pointedly, if researchers can create intellectually insightful and professionally useful accounts of organizational partnerships without revealing identities, then perhaps employers at both technology companies and news organizations—who have formally or tacitly told their employees not to communicate with researchers or journalists at all or only under unrealistically restrictive non-disclosure legal agreements—might realize that there are constructive and minimally risky ways of publicly talking about proprietary processes. Of course, I understand the limitations of making the partnership my primary object of study and level of analysis, and of granting broad anonymity: it may be harder for readers to contextualize the interview data, scrutinize my interpretations and recommendations, or use this report to help hold accountable individual people or organizations.i

Partnership Dynamics

The partnership essentially involves Facebook and its news and fact-checking partners collaborating to manage a flow of stories that may be considered false. Through a proprietary process that mixes algorithmic and human intervention, Facebook identifies candidate stories; these stories are served to the five news and fact-checking partners through a partners-only dashboard that ranks stories according to popularity; partners independently choose stories from the dashboard, do their usual fact-checking work, and then append their fact-checks to the stories’ entries in the dashboard. Facebook uses these fact-checks to adjust whether and how it shows potentially false stories to its users.

Most broadly, Facebook and its partners have created a fact-checking infrastructure. By infrastructure I mean a set of social and technological relationships that is:

- embedded within “other structures, social arrangements and technologies,”90 (e.g., the partnership is integrated with Facebook’s existing architectures and fact-checkers’ ways of working)

- transparent to use without requiring reinvention or assembly for each task (e.g., the partnership and dashboard are largely stable and self-sustaining)

- scoped beyond a single event or site of practice (e.g., the dashboard is not customized for partners or used idiosyncratically)

- learned as a member of a group that agrees on its taken-for-grantedness (e.g., the dashboard is most often just used without critique, not debated or interrogated on every use)

- shaping and shaped by conventions within communities of practice (e.g., people at individual fact-checking organizations decide how the dashboard is used and how stories are selected)

- embodied in standards that define the nature of acceptable use and replication (e.g., the dashboard and partners signal which stories are more or less likely to be fact-checked)

- built on an installed base of other infrastructures with similar characteristics (e.g., the partnership relies on established assumptions and traditions like the idea of popularity on social media or what defines a “good” fact-check)

- visible only when it breaks down91 ii (e.g., the dashboard and partnership primarily receive attention from partners or from the general public when story popularities seem nonsensical or when “fake news” is perceived as powerful)

This infrastructural way of thinking helps to show not only partners’ different social and cultural assumptions, but also how standards, practices, values, and disagreements appear in the design, meaning, and use of technologies. Following Nielsen and Ganter,92 I aimed both to follow the partnership’s operational dynamics (tracing its practices, functions, metrics, and memberships) and to show how its strategic priorities appeared in the infrastructure that encoded its assumptions, goals, and metrics of success. The dashboard and workflows were the primary coordinating infrastructures.

Partners had little operational contact with each other. Except for one meeting held when the group was under public scrutiny and partners wanted to discuss the collaboration, no one mentioned any regular meetings, shared best practices, or discussions of a collective voice.

The partnership’s dynamics play out in ten dimensions, each discussed below. While these dynamics are conceptually distinct, they are not mutually exclusive; they inevitably overlap and intertwine. Although some details may have changed since I wrote this report, the categories seem sufficiently stable and empirically well supported to show how the partnership works.

Origin stories

I heard no single origin story shared among all partners. Instead, I heard about a set of events and forces that motivated the partnership’s establishment and that appeared more or less prominently in different partners’ narratives. In describing why the partnership was created in the first place, partners variously foregrounded:

- the 2016 US presidential election and widespread public perception that “fake news” had led to the Trump presidency;

- fact-checkers’ desires to scale and speed up work they had already been doing for months after independently observing misinformation upticks;

- Facebook employees’ feelings that the company was somehow—in a way it did not entirely understand or did not admit publicly—at least partially responsible for the propagation of election misinformation, and that a coordinated and committed response was required that would both protect the platform against bad actors and encourage the propagation of content consistent with its community standards;

- the need to continue and refine Facebook-initiated outreaches to news and fact-checking organizations, as a way of more publicly iterating on and deploying initiatives the company had been working on internally for months;

- perception among some partners that Facebook’s senior executives needed a quick and high-profile announcement to show that they had heard the public’s discontent and that they would make amends, a move that some partners saw as primarily a public relations tactic and a way of avoiding formal regulation;

- a recognition by some partners that since much of their traffic came from Facebook, they needed to be “in the room” where decisions were being made about the platform’s relationship to fact-checking organizations, even if they did not fully believe that there was a real, urgent, and tractable misinformation problem that Facebook needed to solve; and

- a public letter from the International Fact-Checking Network (IFCN) calling on Facebook to accept responsibility for its role in misinformation ecosystems and to work with news and fact-checking organizations that had agreed to follow a “code of principles.”93

Although all partners consistently mentioned the IFCN letter, partners across all my interviews also emphasized that the partnership was consistent with their existing work, saying that news organizations had always been concerned with truthful reporting, and Facebook had always been concerned about the “quality and integrity” of its site. At the same time, news organizations often saw their relationships with Facebook as contentious and involving disingenuous public relations strategies, and the IFCN had long been trying to organize, professionalize, and increase the impact of fact-checking. While the election may have sparked the partnership’s formation, the partnership emerged from long-standing institutional dynamics.

The partnership was publicly announced in December 2016. Facebook then independently began working on a “dashboard” that appeared in almost all of my interviews as a central piece of shared technological infrastructure. Facebook product designers and engineers created the dashboard and then gave partners access to it. The news and fact-checking partners I spoke with said they had virtually no input into its creation or design and were skeptical that Facebook alone had the capability to design a solution to such a complex, multifaceted problem.

Although no partner would provide me access to the dashboard or show me screenshots of it, all described it and the associated workflow similarly. The dashboard is discussed further in the sections that follow, but briefly: each partner received the same access to a central, shared repository of candidate “fake news” stories. Facebook populated the dashboard with these stories but did not coordinate with individual partners about which stories they selected from it. After choosing articles to fact-check at will, partners added to each story’s dashboard entry a link to the URL of their fact-check.

Priorities

Closely related to this diverse collection of origin stories, I heard a competing set of partnership priorities. Several news and fact-checking partners stressed that they were effectively public servants, whose job was to “empower the people who are being talked over,” “reduce deception in politics,” and follow an “example-driven” process that would reduce both the prevalence and impact of “fake news.” I heard desires to understand the “provenance” of fake news, to “empirically examine the worst of the worst of the election,” and to create solutions that would prevent such a failure from ever happening again.

Several partners also stressed that their work is for the public and that, since Facebook is one of the major ways of reaching audiences, they wanted to work with as many media partners as possible, including Facebook. One fact-checking partner said, “Our policy is: steal our stuff. Please.”

I also heard skepticism about Facebook’s priorities, especially that the platform was using news and fact-checking organizations as “cheap and effective PR [public relations]” and that this was another step in its experiment-driven culture. “It’s been tinkering with the democratic process for years. . .it’s ugly,” one person told me. Pointedly, another partner described participating in the partnership as a kind of ethical duty. Even though this person doubted the partnership’s value, they said news and fact-checking organizations needed to engage with Facebook because it was “privileging dark ads” and creating a platform that has “contributed to genocides, slave auctioning, and migrant violence.” This interviewee saw Facebook as a media company and a public utility, and viewed participation in the partnership as one way to hold the company accountable.

Beyond broad ideological positions, different priorities also played out in the design and use of the dashboard. Described as a way to get fake news “out of Facebook” to intermediary fact-checkers, and then “back into Facebook,” the dashboard largely reflects Facebook’s priorities, according to participants, not those of news and fact-checking organizations. One partner described the goal as “keeping people on Facebook, to make sure they look to the platform for context.” Another partner said that Facebook “provides links to stories that have been flagged by users, or maybe algorithms, I don’t know,” and that partners can then “rank those stories by popularity.” They continued, “We’ve asked them a hundred ways to Sunday what popularity means. We don’t know the mechanism they use to determine popularity.”

Even though this partner did not know how Facebook determined story popularity, they shared Facebook’s priority of focusing on popularity: “You don’t want to write about something that hasn’t gone viral because you don’t want to elevate its visibility. But if there’s something that is being widely circulated, then you want to debunk it.” Partners did not only align themselves with Facebook through the platform’s popularity metric—they also described choosing stories because they fulfilled their individual organizational mandates or because their readers had written to ask about them. Still, there were moments when some partners seemed to acknowledge that they had little choice but to align themselves with the dashboard’s priorities: accepting whatever drove story selection, those stories’ rankings, and the dashboard’s once-per-day refresh rate.

Sometimes priorities conflicted, or at least diverged. One participant described deep skepticism about the dashboard’s “popularity” metric, saying they suspected (but could not prove) that stories with high advertising revenue potential would never appear on that list because “sometimes fake news can make money.” Facebook, they suspected, would not want fact-checkers to debunk high-earning stories. Regardless of whether this is true, this skepticism paints the dashboard as a place where clashing priorities, mistrust, or misaligned values can surface through seemingly innocuous design and engineering choices. It also draws a contrast between fact-checkers motivated by public service and a perception among some partners that Facebook is primarily an advertising company that ultimately prioritizes revenue generation over public values.

Regarding the dashboard’s design, which one partner described as “very word based,” almost all of the partners said the system needed to process memes, images, and video content far more effectively. One partner said, “We should be doing work on memes. The partnership doesn’t address memes, just stories. We’ve had these conversations with Facebook; it’s something they say they want to do but haven’t done it.”

Echoing another partner’s concern over the company’s financial stakes in misinformation, one partner said they would like to see the partnership address advertising content that circulates on the platform, to give partners an opportunity to debunk harmful, paid, partisan content. Finally, several partners wondered whether Facebook’s priorities had become embedded in the dashboard design, given the types of sources and stories they were not seeing listed: “We don’t see mainstream media appearing [in the dashboard]—is it being filtered out? Is it not happening?” and “we aren’t seeing major conspiracy theories or conservative media—no InfoWars on the list, that’s a surprise.”

The partnership’s priorities thus play out in two places: in partners’ stated motivations and values (why partners say they want to participate) and in the dashboard’s design and use (the conditions of partnership participation that largely reflect Facebook’s priorities). The dashboard’s emergence from a largely solo, Facebook-driven design process means that it is difficult for partners to negotiate the terms of their participation on an operational level or to ensure that their priorities appear in the partnership’s shared technological infrastructure.

Access and publicness

I consistently heard most partners say they wanted more public knowledge about and within the partnership, transparency about its workings, and critique from outside the partnership that could force change within it. One partner asked that Facebook be “more forthright and willing” to talk about details of the partnership and its design, with partners and with the general public. This same partner called on critics and academics to focus on the partnership, to make it a central object of concern, and to publicly pressure Facebook to allow members to speak openly about it. Another hoped that my report might help to force Facebook to “provide info about how effective this is,” saying, “It would be great if they put out a report. We’ve been doing this for a year. They’re in the best position to know how effective it is. We need more transparency.”

When asked about the effectiveness of the partnership, another person said, “I can’t suggest [how] to improve something I can’t evaluate. But we are probably seeing the largest real-life experiment in countering misinformation in history and know very little of how it’s going. That’s my number one concern. Not because I think Facebook is hiding anything, but because I think we could all learn loads from it.” Many partners I spoke with expressed interest in this report because they suspected I might discover things about the partnership that they themselves had been unable to learn.

Most people required, as a condition of their participation in this study, that I not name them or their organizations. They stressed the need for anonymity and discretion not because they or their organizations wanted the partnership to be secretive, but because Facebook banned them from speaking about it. By speaking with me, even off the record, several of them were taking personal and organizational risks. They did so because they believed public scrutiny needed to be brought to the partnership.

In refusing to talk to me after multiple approaches, one partner—an experienced journalist—pointed me to the organization’s public statements on the partnership, citing Facebook’s gag rule. Partners also felt unable to comment on each other. Several mentioned that The Washington Post was originally supposed to be a partnership member, but withdrew for reasons they thought might be significant but would not comment on. (I was unable to speak with staff at The Post.)

These tensions are more than a methodological challenge for this study. I discuss them as a partnership dynamic because the partnership represents a new kind of journalistic collaboration. News organizations have always had proprietary relationships with other companies (non-disclosure agreements are nothing new), but when the world’s largest social media platform enters into proprietary relationships with major news and fact-checking organizations that shield those relationships from public scrutiny or academic inquiry, it becomes incredibly difficult to learn how the contemporary press functions and to hold these new platform-publisher hybrids accountable.

Beyond this particular partnership, the point stands: if Facebook creates entirely new, immensely powerful, and utterly private fact-checking partnerships with ostensibly public-spirited news organizations, it becomes virtually impossible to know in whose interests and according to which dynamics our public communication systems are operating.

To be clear, I suspect no malicious intent on the part of any partner, and I fully appreciate the standardized nature of inter-organizational non-disclosure agreements. But since this type of partnership is focused on a novel concern (news organizations’ enrollment in the legitimacy and efficacy of a privately held but publicly powerful communication system), it seems that the relationships among partners, and between partners and the publics they acknowledge serving, should be governed by something other than standard corporate non-disclosure agreements.

Leverages and types of power

In tracing the reasons the partnership formed, its priorities, and its tensions around access, several types of inter-organizational leverage and forms of sociotechnical power surfaced.

The most obvious and concrete form of leverage and power was money. At the outset Facebook didn’t grant partners any payment for participating in the network, but starting in approximately June 2017 it offered all partners compensation. In contrast to public reporting claiming that “in exchange for weeding through user flags and pumping out fact checks, Facebook’s partner organizations each receive about $100,000 annually,” several partners said they had refused to accept any payment from the platform. They said that accepting such funding would, to them, breach their independence. They did not want to be perceived as in service of the platform but, rather, in partnership with it, as a way to achieve their public aims.

One partner also expressed reluctance about relying on such funding, given its perception that Facebook was continually revising its terms of partnership with news organizations, pointing to previously defunded arrangements made through the company’s Instant Articles program.

Others said they had gladly accepted money from Facebook. Before the funding arrived, one partner said they told the company, “OK, you get what you pay for, we’ll do what we do.” Another said, “Our model is, if we do the work, you need to buy it. Facebook is using it, and benefitting from it, so we should be compensated for it.” These more transactional views of partnership focused on fact-checking as a service that could be commodified.

Others saw Facebook as holding power over their traffic and having a monopoly on the phenomenon they cared most about: accessing and debunking misinformation. They perceived the company’s invitation as almost non-optional. They acknowledged that the platform brought them a significant number of readers (none would give an exact number) and described the partnership as a “way to find things that need to be fact-checked. The Facebook partnership makes it way easier to do that—it was a struggle to see what was being shared. . .best part is that Facebook is doing that work, providing views on what’s popular, what’s circulating.”

Facebook thus provides a kind of organizational service to fact-checkers, acting as a gatekeeper to desperately needed online traffic, sources of misinformation, and metrics of popularity. But, in doing so, Facebook also holds a kind of leverage in the form of irreplaceable power: it becomes a key source of the raw goods that fact-checkers process, but also a potentially mission-limiting partner. One partner said, “Our biggest challenge is figuring out what’s popular,” but worried that the complexity of its fact-checking would be unreasonably narrowed if it focused only on that popularity. “Most of the stories [on the dashboard] are SO wrong, they’re kind of easy.” That same partner later wondered if they were missing out on fighting more complex and powerful forms of misinformation that did not meet Facebook’s threshold for popularity.

Not only has Facebook become a gatekeeper of traffic and a monopolistic source for stories, but it was seen as potentially limiting the complexity and significance of fact-checking that fell outside of Facebook’s prioritizations of misinformation. Indeed, one fact-checking partner wished it could drive fact-checks to Facebook, making Facebook users see what they considered to be important rebuttals, instead of having to wait for Facebook to drive traffic to the partners.

Partners often described feeling powerless within this cycle. No partner said that Facebook defined its work, since each could choose which dashboard stories to work on and apply its own standards of judgment. Still, several said that within the partnership they were essentially beholden to Facebook’s control over a closed computational infrastructure that surfaced stories and learned from their selections.

Partners had their own kind of leverage and power, though. Through their commitments to the International Fact-Checking Network’s code of principles and their own longstanding brands, these organizations had publicly proven themselves as professionally legitimate, trustworthy, and, to some extent, beyond the charges of interest and bias that are often leveled at Facebook. The platform earns associational capital through affiliations with network-vetted partners, giving it the ability to say that it does not define “fake news”; it leaves that to its partners of good standing.

Partners can also self-organize. Although all partners said they rarely coordinated their work with others and had little knowledge of how other members viewed the partnership, at one particularly low moment in the collaboration’s operation several partners banded together to discuss the future of the partnership and articulate a collective response to their perception that Facebook was taking them for granted, using them as public relations cover, and ignoring their requests. While the moment passed and the partnership survived, several partners offered this as evidence that they retained a shared, independent identity that gave them both instrumental power, as they collectively reconfigured the terms of the partnership, and symbolic power, as they publicly signaled their displeasure with Facebook through trade press articles.

Categories and standards

Interviewees also implicitly and repeatedly described the partnership in terms of standards: standards that they attempted to interpret, that they felt beholden to, that were protected within their own organizations, and that they saw as both enabling and problematic.

Categories and standards fell into two sets, corresponding to two partnership functions. The first set concerns surfacing misinformation, assessing its circulation, and signaling responses to it. Facebook controls almost all of these standards, though news and fact-checking partners play small feedback roles. Specifically, in its desire to determine both the “prevalence and severity” of misinformation, Facebook created and maintains rules for identifying the existence, spread, and harm of misinformation. That is, despite claiming that fact-checkers define fake news, the platform identifies the list of candidate misinformation stories that fact-checkers see on the dashboard. Fact-checkers may reject these categorizations (judging a dashboard item to be true and not needing debunking), but their starting points are defined by Facebook.

Beyond the categorical judgment of defining content as fake or not, Facebook also signals the prevalence and implied severity of the candidate stories through the “popularity” metric it shows partners through the dashboard. At the time of these interviews, a simple “popularity” column in the dashboard was the primary way that fact-checking partners could sort candidate stories.

Partners voiced a variety of concerns over the dashboard: “It has a nebulous popularity bar, but we don’t know what it signifies”; “Facebook doesn’t say where the [dashboard] list comes from or how it’s made”; “People flag stories at some rate, but I don’t know what the dashboard’s threshold is.” One partner became suspicious of how the dashboard worked, and of whether it was changing without their knowledge, when they saw the list of stories suddenly grow dramatically for no apparent reason. As people often do with computational and algorithmic systems they do not understand but are forced to use, partners created “folk theories” about how the dashboard worked and what it meant.iii They guessed at why it changed and what its columns signified.

More recently, due to partner feedback, Facebook has added an “impact” metric, though this category remains similarly opaque to most partners. These measures of “potentially fake,” “popularity,” and “impact” are determined through a proprietary combination of user feedback (Facebook users flagging stories as fake) and computational processing (Facebook algorithms judging stories as fake). The choices that fact-checkers make about which dashboard stories to work on, and the fact-checks they upload to the dashboard, are further categorical inputs that then help Facebook (through an undisclosed process) determine which stories should continue to surface on the dashboard and how their popularity should be determined. Debunked stories are then returned to Facebook, which decides what to do with them: whether and how to display them to users, and what context to give them.

Facebook can thus claim that it does not make final judgments about which content is fake or real—fact-checkers ostensibly are the experts in judging the character of dashboard stories—but through a complex and proprietary set of classifications, it powerfully sets the conditions that surface and characterize misinformation. These classifications are largely inaccessible to partners.iv

In the second set of classifications, partners have considerably more control and power. These are the judgments that fact-checkers bring to stories, which represent their individual and organizational standards and reflect trends in the field of fact-checking as a whole. Partners largely did not coordinate their work: “I think our dashboard is the same as other organizations but I’m not sure” and “we tried to do a Slack channel [with other partners], but it didn’t really work as people got too busy.”

This local control over standards was both the preference of partners (“we’re not really interested in what competitors are doing”) and aligned with some members’ understanding of the partnership’s aims as a whole. Indeed, Facebook publicly states that multiple fact-checks are required before a story is classified as disputed, and one partner stated that they “like multiple fact-checkers with multiple methods” because the perceived diversity of methods is thought to make the partnership’s collective judgment more defensible.

Across these two types of standards and classifications—those that Facebook uses to populate the dashboard and those that fact-checkers use within their work—there is a kind of division of categorical labor. There is largely no coordination amongst fact-checkers, with the work of aggregating judgments and assessing the stability and usefulness of categories residing mostly within Facebook’s proprietary infrastructure.

Managing scale

The idea of large-scale fact-checking is at the heart of almost all aspects of the partnership. From Facebook’s reliance on a mix of mass-scale user flagging and computational processing, to fact-checkers’ desires to ensure that their work reaches the greatest number of people quickly and in as many forms as possible, partners have a shared desire to control how far and wide misinformation travels.

One of the motivations for designing and deploying the dashboard among partners is Facebook’s desire to move some of the work of mass-scale judgment to news and fact-checking organizations. To do this, Facebook needs to constrain the flow of content it sends to partners, to reduce their workload and take into account the relatively small organizational capacity of many fact-checkers. It is seen as Facebook’s duty to manage the scale of information, a duty that is tightly tied to the partnership’s desire to minimize the impact of large-scale misinformation. The need and responsibility to, as one partner put it, “deal with scale” is inescapable. One fact-checker said, “Facebook knows how to deal with scale, we don’t.”

That said, many partners described struggling with scale. Some were confused by how the dashboard represents the large number of potentially false stories. During one interview, a partner reported that there were currently “2,200 to 2,300 stories in the queue,” a number roughly confirmed during a follow-up interview, but they estimated that “about seventy-five percent of them seem to be duplicates.”

Partners often commented that it was hard to know the true scale of the candidate stories Facebook had identified, or the true scale of the misinformation problem, because, although the dashboard seemed to have a very large number of stories, the URLs often looked similar and it was unclear which stories were circulating uniquely. One partner said that between December 2016 and December 2017 they completed about ninety stories through the partnership. They had also submitted over 500 URLs as misinformation, because multiple dashboard entries were often identical and could be debunked with the same fact-check.

One partner said they were able to process four to five stories per day from the dashboard, while another said they submitted about two to three fact-checks per day. Another reported they usually avoid the problem of scale altogether because many of the dashboard stories are about celebrities, and their focus is on political fact-checking. One way partners limit their work amid large-scale misinformation is by narrowly focusing on fact-checking that aligns with their organizational missions. This suggests a subtle division of labor that leaves news organizations with considerable power. While Facebook may have the power to set scale sizes by defining categories and dashboard thresholds, fact-checking organizations may practically ignore much of this surfeit by declaring it outside their purview.

Finally, one partner aptly summarized the ambivalence many members felt about the seemingly large scale of misinformation by simply saying, “I can’t entirely answer the question [about scale]. I’d need to identify how many stories could be done versus how many we’re doing. I don’t know what the entire universe is, we just do what we’re able to do. My sense is we could do more if we had more resources. . .I don’t know what the full scope is.” As with other partnership dynamics, members often defaulted to simply focusing on their narrow, well-understood domains, remaining unbothered by the scale of misinformation because they had little sense of how big the problem was, how much of it was captured by the Facebook dashboard, and what impact their work had on the overarching phenomenon.

Timing

With one exception, all partners stressed their desire to get ahead of misinformation by debunking stories before they could travel far and wide. Before the Facebook network, one partner said, their work was mostly prompted by readers’ questions. But, “by the time we answered the question, it was usually days after the [misinformation] had gone viral. We would wait until something had critical mass before we wrote about it, which felt too late.”

This need to anticipate virality was widely shared across the partnership, with one member describing it not only as an important part of reducing misinformation’s impact, but also as a way of reducing fact-checkers’ workload and the number of stories in the dashboard.

As with other dimensions of the infrastructure, partners had also formed folk theories about its timeliness, or just seemed resigned to not knowing how the system worked: “We don’t know how time works on it, but it seems pretty quick—an event will happen and then we see it. Probably a lag of twenty-four hours. We look at it and you can’t really tell; we’re told it’s updated every day.” All partners similarly expressed uncertainty about Facebook’s understanding of speed, but none said they compressed their own work to keep up with what the dashboard was feeding them:

When we pick something to write about it, it takes a day or two to write the fact-check. I’m not sure how Facebook’s speed works but we have about a 24–36 hour turnaround. What we’re doing is the same thing we do with everything we do on our website – there’s a rigorous review process for everything. . .For any story, there will be 4 people looking at it—we don’t want to get our facts wrong, we don’t want to have to correct it or change ratings.

While no partner said they sped up their process or lowered standards, several said that the dashboard process needed to be faster and more efficient: “We need to get the time horizon down—if it takes 1,000 people to flag something as fake, that takes a long time, then it goes to the list and I don’t get to it. . .this can all take a looooooong time. If too much time passes between story, debunk becomes less efficient.”

One partner suggested that artificial intelligence (AI) would be the real “game-changer” for how time worked, and expressed a hope that fact-checkers would be able to help design these time-keeping AIs.

This was a common sentiment, with fact-checkers expressing uncertainty about how Facebook’s categorizations worked and skepticism that its thresholds meaningfully aligned with the timescales they understood fact-checking to require. Indeed, one fact-checker cautioned against placing too much emphasis on speed and anticipating virality, saying, “It’s never ‘too late’ because fake news stays around for a long time. . .These stories have long, long, long tails. It doesn’t matter if it takes fact-checkers a few days to do their work [because] whenever there’s an opportunity for these stories to reappear, they will.”

As with other dimensions of the partnership, time dynamics play out in multiple ways: in partners’ folk theories about when fact-checking should happen; in technological infrastructures that encode rhythms, define newness, and set thresholds; and in people’s aspirations about what they think fact-checking could be, if future technological infrastructures were designed to align with what one participant called “fact-time.”

Automation and ‘practice capture’

While no partner expressed grave concern about, or great hope for, automated fact-checking, several described dynamics that suggest a kind of proto-automation at work, and a phenomenon I call “practice capture.”

As described, to populate the dashboard of potentially misinforming stories, Facebook uses a combination of user feedback (flagging) and computational processing (algorithmic guesses about the identity and circulation of misinformation). The decisions that fact-checkers make about how to interact with that dashboard—which stories to pick or ignore, which categories of content to prioritize, which types of stories seem similar, which types of misinformation are judged egregious, and what types of rebuttals to make—all feed into a system that Facebook uses to further populate the dashboard.

Described as a way to improve the quality of the dashboard and the efficiency of fact-checkers, such a feedback loop is both critical to iteratively refining an “example-driven” design process (in which humans guide machines to learn which examples are salient and canonical and which can be safely ignored) and a way of modeling and operationalizing fact-checkers’ practices. I call this “practice capture.”

While it is unlikely that these relatively subtle judgments can be completely automated any time soon, it is more likely, as one partner put it, that Facebook will gradually shift to focusing fact-checkers on better understanding “edge cases” (particularly difficult judgments) and the emerging practices of bad actors who will continue to innovate misinformation techniques. Thus, fact-checkers will not disappear from these systems and human judgment will not be displaced by algorithms, but there is a very real potential that Facebook—through a dashboard-style feedback loop—may gradually narrow the space within which its human partners work.

It is not inconceivable that the dashboard will gradually be populated only with stories that the machine learning system fails to understand: fewer routine cases and a greater number of complex ones (the kind of complexity one partner described longing for in the dashboard). This may be described as efficiency and a better use of human judgment, but partners should be aware of how their practices are being modeled, which parts of their judgments may or may not be represented in these operationalizations, which aspects of their habits and rituals may become subtly and obliquely standardized within a machine learning algorithm, which parts of their expertise become fetishized and valued and which parts are captured by proprietary systems, and how the field should approach the education of its future professionals if critical (but seemingly “simple”) parts of its practice are black-boxed and made mundane.

While some partners seemed to appreciate this potential future, others were skeptical, saying, “I don’t know how you’d be able to replace humans where you need to do the review” of the misinformation, and write a fact-check that not only rebuts misinformation but that also addresses the “implications and ramifications” of false claims. This focus on contexts and consequences of misinformation requires a level of judgment that this same partner said was beyond machines:

We also use [fact-checks as] an opportunity to explain how things work. If there’s some bogus claim, we explain what the process would be if that were true. For example, for putting someone on the dollar bill—we don’t just say Obama isn’t going on the bill [rebutting a false claim], we say how someone would get on a bill.

Automation and practice capture were not core concerns of the partnership, but a close reading of its infrastructure and its partners’ differing aspirations suggests what kind of automation may be on the horizon: the kind that some partners aspire to and others fear.

Impact

Concerns about transparency, access, and leverage were often closely related to questions of impact. Put most simply, it was often hard for partners to tell what effect, if any, their work and the partnership were having. Additionally, partners held different views about what impact they ideally should have, that is, what should happen to the misinformation they identify.

Partners framed many of these concerns as a worry over Facebook’s dominance of partnership knowledge. In response to my question about what impact they thought the partnership was having, one partner said only, “I just can’t answer that question without data that only Facebook has.” Citing a leaked email in which Facebook claimed that a “news story that’s been labeled false by Facebook’s third-party fact-checking partners sees its future impressions on the platform drop by 80 percent,”94 several partners expressed skepticism about this number, saying, “I don’t know how that number is calculated” and “we have no public proof of that” and “I can’t fact-check that claim, and that’s a problem.”

One partner said that after they fact-checked a dashboard story and uploaded their report to Facebook, “We don’t get any information about these stories or what’s happened to them. What they’re telling the public is no more than what we’re getting. We don’t know and we don’t understand how they’re using it.” Several partners expressed a vague sense of embarrassment over their ignorance about impact, with one describing the entire partnership as an “ongoing experiment we don’t really know much about.”

Combined with the leaked Facebook email, these consistent statements of ignorance suggest that the partnership shares no single set of clearly stated impact measurements. Facebook may have reason to believe its eighty percent number, and reason to be happy with this as evidence of the partnership’s success, but such confidence and contentment are not shared by other partners who have far less insight into the infrastructure’s inner workings.

Indeed, Facebook’s definitions of success entail three broad logics: nothing Facebook does should harm or fail to abide by its community standards; some content is probably consistent with community standards but should not circulate on the platform regardless (a “complicated gray area”); and when exposure to harmful content does happen, the harm should be minimized.

From Facebook’s perspective, the partnership is successful if it reduces exposure to misinformation, helps to remove content that violates community standards, and provides context to information that does get through. To the extent that partners work within these measures of success and have little recourse of their own to find other evidence of success or failure in the partnership, they implicitly agree to Facebook’s metrics and are enrolled in the service of its definition of success.

A few partners found other measures of success that they could determine for themselves. PolitiFact publicly stated that the sheer scale of the partnership’s accomplishments and its own organizational reach was one measure of success: “Sharockman said PolitiFact has used it to check about 2,000 URLs since the partnership began—which is a lot, considering the outlet has published about 15,000 fact checks in its entire 10-year history. ‘To cover that much ground in one year with this Facebook tool is a sign of success.’”95

Another partner similarly stated that they had increased their workload to some positive effect and were receiving funding to support it: “We’re doing more now than we have been able to do, which is good. It seems to be effective enough—seems to be some level of effectiveness here.” Others offered the continued existence of the partnership itself as evidence of success, saying that the ability of fact-checking organizations to come together and continue in partnership with Facebook indicated that the partnership was valuable.

Even without considering Facebook’s role, several partners described being proud that the partnership showed how fact-checking as a field was mature enough to sustain collaboration—that it has “raised the profile of fact-checking [and is] helping to reach new people and educate in new ways.”

A final dimension of impact concerns not whether the partnership itself is successful, but what partners think should happen to misinformation identified through the partnership. Very much echoing a marketplace model of speech in which the answer to bad speech is more good speech, all partners agreed that misinformation should be removed from Facebook’s main newsfeed but that it was acceptable—and even desirable—for such misinformation to continue to exist on web pages that the platform did not surface.

As evidence of this uniformity of diagnosis but disagreement over a remedy, all partners cited America’s Last Line Of Defense as an example of a website that consistently spreads mis- and disinformation. And while some partners thought Facebook should ban the site from its platform, others thought it should simply be moved into a secondary area, or that it was sufficient to attach fact-checks to stories that circulated on the website.

Partners largely defined “success” not as the eradication of existing misinformation, but as the widespread propagation of fact-checks that would, ultimately, prevent misinformation from having political power. One partner thought Facebook should “put fake news in a ghetto—they’ve got to go, they can’t be intermixed with the newsfeed. Put them somewhere where it’s clear that they’re not real. This means Facebook admitting they’re a media company, not a tech company.”

Others agreed but cautioned that “Facebook’s a private company [with] the ability to set rules and policies and take action they want to” and that they did not think websites should be banned from Facebook because someone’s “motivation for sharing might be complicated. . .and [we] don’t want to police the internet.” Still others echoed this perspective, saying that it’s sufficient that “our fact-check is attached [to a disputed story on Facebook] so people know to think about it as they share. . .As a First Amendment supporter I find that cool.”