
Editor’s note: This is the first piece in a series about monitoring political communications during the 2019 Indian elections on WhatsApp, a closed messaging network that’s difficult for researchers and journalists to enter.

More and more, political advertising is being distributed on closed networks, such as WhatsApp and Messenger (both owned by Facebook), Signal, and Telegram. In many places, people like closed networks—they protect users’ privacy, they offer a sense of intimacy. But during elections, closed networks provide a way for political campaigns and activists to avoid scrutiny from regulators and reporters; extracting ads, memes, and other material from inside closed groups is intended to be difficult. That’s why our research team set out to establish routes through which journalists can collect and analyze what large-scale political campaigns are saying on WhatsApp.

The 2019 Indian general election was called the “first WhatsApp election” in India. It wasn’t, however, the first in the world. Over the past twelve months, elections in Nigeria and Brazil have also been referred to as “WhatsApp elections,” with postmortem analyses suggesting that the app was rife with manipulative political messages, including disinformation.

At the Tow Center for Digital Journalism, we used the Indian election cycle, which ran between April 11 and May 19, 2019, as a case study for assessing both general political discourse and information manipulation on WhatsApp. With no APIs, tools, or best practices in place to help outsiders tap into ongoing activity inside closed groups, we devised a strategy to monitor a subset of the political conversation, over a period of three and a half months. The study’s resulting data set—which grew to over a terabyte in size—contains 1.09 million messages, retrieved by joining 1,400 chat groups related to politics in the country.

The landscape

In the aftermath of the Cambridge Analytica scandal, it has become harder to gain insight into what’s happening on Facebook and Twitter. Claiming to be motivated by user privacy concerns, social media companies have incorporated anti-scraping practices, increased restrictions on data accessibility through their publicly available interfaces (called application programming interfaces or APIs), and rolled out ad-tracking tools that are bug-prone. In combination, these changes make identifying unsavory activities—such as “coordinated inauthentic behavior,” acts of violence, and misinformation—more difficult than ever before. There are tools that can help, including CrowdTangle, the Facebook Graph API, and the Twitter API, but WhatsApp is meant to be a black box.

In India, more than 400 million of the 460 million people online are on WhatsApp. Usage of the platform has become so ubiquitous in the country that many people consider its group chats (often themed by interest and maxed out at 256 participants, a cap enforced by the application) one of their primary sources of information. Given how WhatsApp was used during the elections in Brazil and Nigeria, many predicted it would become a key battleground between warring political parties in India in the lead-up to the 2019 general election. Indeed, campaigns developed extensive plans aiming to use WhatsApp to get their messaging into every household.

There are seven national political parties in India. The main contenders for prime minister belonged to two of them: Narendra Modi, the incumbent, belongs to the Bharatiya Janata Party (BJP), and Rahul Gandhi, the leader of the opposition, belongs to the Indian National Congress.

Both parties invested heavily in social media campaigns in 2019. A report in the Hindustan Times suggested that the BJP planned to have three WhatsApp groups for every one of India’s 927,533 polling stations. Meanwhile, Congress’s social media budget was reported to have increased tenfold since the previous election, in 2014, which BJP won by a landslide. In 2019, BJP capitalized further on its lead over Congress, winning 303 seats compared to 2014’s 282. Modi was reelected as prime minister.

The advantages of using a platform like WhatsApp for campaigning are clear: not only does it allow strategists to tailor messages to various interest groups (similar to what Facebook allows with its ads), but it also offers senders anonymity. Since WhatsApp identifies users only by their phone numbers, it’s easy to misrepresent a sender’s identity to others, especially in large groups. WhatsApp has no way to surveil the content being shared across private groups, nor can group members vet the origin of a message or be sure of the sender’s motives. Much remains shrouded in obscurity.

The end-to-end encryption of WhatsApp groups, coupled with their promise of privacy, is a double-edged sword. While plenty of groups are created by legitimate organizations, including journalistic ones, innumerable others are used to commit crimes and take advantage of the lack of oversight. (In some cases, which have involved violence, child sexual abuse, or rape, Facebook and law enforcement have intervened.)

Deception is easy. There are no preventive measures in place to stop nefarious actors from creating a group called “The Official BJP Group,” nor is there a way for users to know whether such a group is official. Until April, users could be added to groups unsolicited and without their consent, exposing them to misinformation, campaign messaging, and propaganda. The consequences can be severe: in India, we’ve already seen false rumors lead to a spate of mob lynchings and successful anti-vaccination campaigns.

What we did

Given the landscape, trying to tap into and analyze political conversations happening in India on WhatsApp was going to be hard. We knew from the start that the size of the country’s population, the myriad languages spoken, and the inherently “private” aspect of the application all but guaranteed that, at best, we would only get a handle on a small fraction of the conversation. Our initial recourse was to join as many relevant groups as we could find.

There are two ways in which one can become part of a group on WhatsApp: get added to a group by an administrator or join via an invite link. We started by crawling the open web for public invite links, an approach adopted by researchers who have conducted similar research in Brazil. We augmented that by looking for invite links shared on Twitter, Facebook, and WhatsApp groups of which we were already a part. Activists, party affiliates, and campaign workers publish the details of these open groups, encouraging their audiences to join and see how they can help their party.

Simply searching for “WhatsApp invite links” or “WhatsApp groups” on a search engine led us to websites whose sole purpose was to aggregate WhatsApp invite links. Instead of blindly joining every single group, we joined only those relevant to us (groups whose names indicated that they were politically charged). Those included “Mission 2019,” “Modi: The Game Changer,” “Youth Congress,” and “INDIAN NATIONAL CONGRESS.”

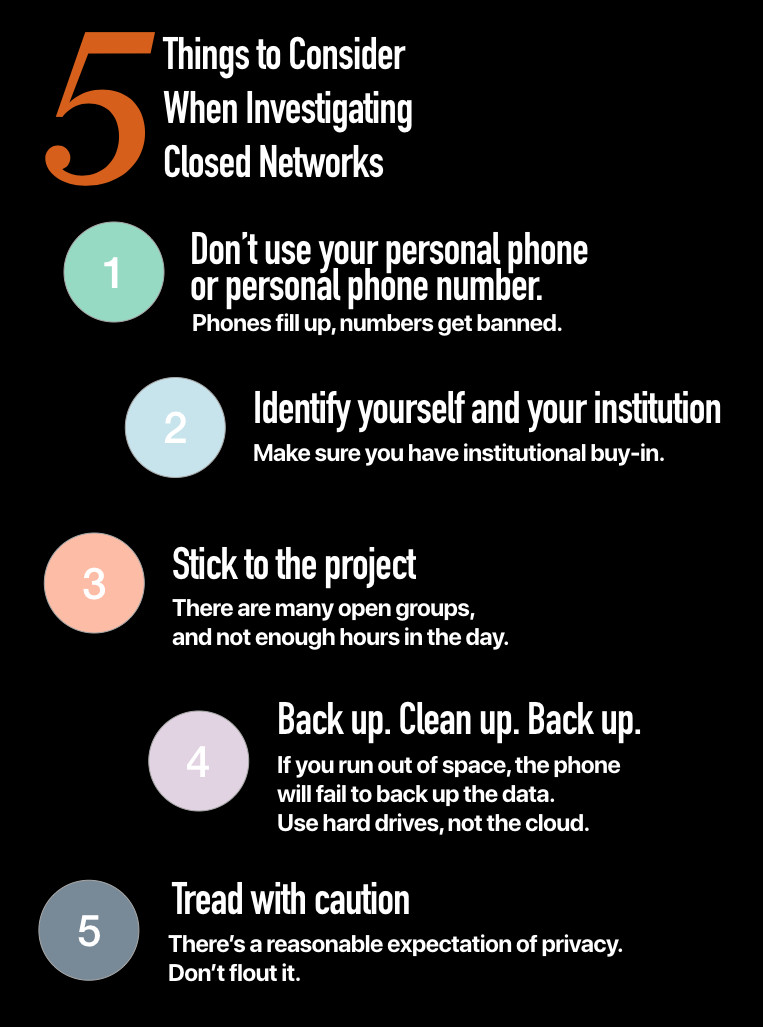

We started this process with a single iPhone and a recently acquired US phone number. To be fully transparent, we identified ourselves as “Tow Center” with the description “a research group based out of Columbia University in New York.” We did not engage in any conversation. To respect WhatsApp’s fundamentally secure design, we joined only groups with at least sixty participants, and we focused on those with messages in Hindi or English. (We needed to be able to understand the content in order to analyze it, and our lead researcher speaks both languages.) If a group had fewer than sixty participants but its name was clearly political, we added its invite link to a “Tentative” list that we maintained, and joined the group if and when its membership reached sixty. We reviewed our lists almost daily.
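The joining criteria above amount to a simple triage rule. The Python sketch below encodes it, assuming a reviewer has already recorded each invite link’s group name, member count, and the language(s) of its messages. The `GroupLink` record, the `POLITICAL_KEYWORDS` list, and the helper names are hypothetical: the study judged relevance by reading group names, and a keyword match is a much cruder stand-in for that judgment.

```python
from dataclasses import dataclass, field

MIN_PARTICIPANTS = 60      # threshold described in the text
LANGUAGES = {"hi", "en"}   # Hindi and English (ISO 639-1 codes)

# Hypothetical keyword list; the study judged group names by hand.
POLITICAL_KEYWORDS = ("bjp", "congress", "modi", "gandhi", "chowkidar", "election", "mission 2019")


@dataclass
class GroupLink:
    """An invite link with the details a reviewer recorded for it (hypothetical record)."""
    name: str
    participants: int
    languages: set[str] = field(default_factory=set)


def looks_political(name: str) -> bool:
    """Crude stand-in for a human reading the group name."""
    lowered = name.lower()
    return any(keyword in lowered for keyword in POLITICAL_KEYWORDS)


def triage(link: GroupLink) -> str:
    """Return 'join', 'tentative', or 'skip' for a single invite link."""
    if not looks_political(link.name) or not (link.languages & LANGUAGES):
        return "skip"
    if link.participants >= MIN_PARTICIPANTS:
        return "join"
    return "tentative"  # political but too small for now; revisit later


def review_tentative(tentative: list[GroupLink]) -> tuple[list[GroupLink], list[GroupLink]]:
    """Daily review: split the tentative list into groups to join now and groups to keep watching."""
    ready = [g for g in tentative if g.participants >= MIN_PARTICIPANTS]
    waiting = [g for g in tentative if g.participants < MIN_PARTICIPANTS]
    return ready, waiting
```

Keeping the threshold and language set as named constants makes it easy to document, and later revisit, exactly which rule a given collection run used.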

The obstacles we faced

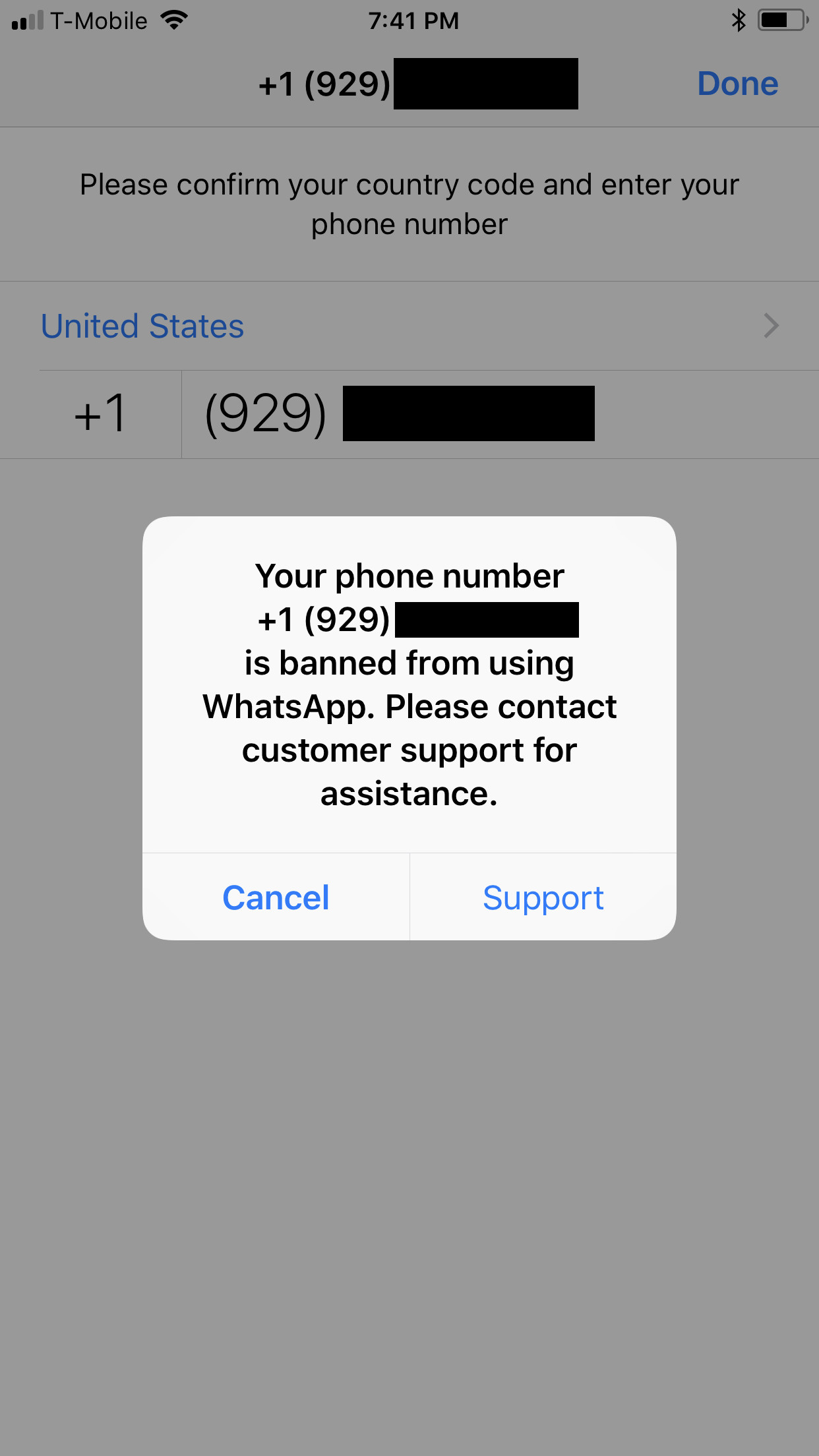

During the first few days of tracking groups, about fifty of them removed us. Then, without any warning, WhatsApp banned our phone number. We remain unsure whether this was because we were joining too many groups (we were in about six hundred groups at the time of the ban) or because too many administrators had reported our foreign number as suspicious.

So we started again. But this time we activated multiple phones with fresh phone numbers (six in all), and kept the number of groups on each device below three hundred.

We were banned twice more, once after joining only twenty groups on one of our phones. A plausible theory is that Facebook flagged us as bots after we attempted to automate the process of joining groups via WhatsApp Desktop, a desktop version of WhatsApp on which certain actions can be automated.

Over the course of our study, administrators also removed us from groups around two hundred times. When this happened, we did not attempt to rejoin the group under a different guise. In many cases, the last message we saw before being removed suggested that one of the predominant reasons was that our phone number was from “another country,” i.e., it didn’t have a “+91” country code. It was not uncommon for group members to call attention to the presence of foreign phone numbers and ask administrators to remove them.

Ultimately, in three and a half months of data collection between March 1, 2019, and June 16, 2019, we joined about 1,400 groups. Of these, we were removed from about two hundred, and left two hundred forty that turned out to be irrelevant—marketing spam, pornography, job boards. By the time India’s elections came around, we were part of just under a thousand groups, eighty percent of which we had joined before the first phase of the election period started on April 11, 2019. We joined the first group on March 1, 2019, and the last on May 17, 2019. We stopped collecting data on June 16, 2019, four weeks after voting ended.
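Using the approximate figures above, the group counts reconcile with the total of just under a thousand active groups:

$$1{,}400 - 200 - 240 \approx 960$$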

We kept close tabs on each phone’s storage usage and backed up each phone as it approached its limit. After each backup, we went into WhatsApp’s settings and selected “Clear All Chats” to free up space. We used a piece of commercial software, iPhone Backup Extractor, to pull the WhatsApp database and media out of the iPhone backup files.

By the end of the data collection process, we had amassed over a terabyte (1,000 gigabytes) of data, comprising:

- 500,000 text-only messages

- 300,000 images

- 144,000 links

- 118,000 videos

- 12,000 audio files

- 4,000 PDFs

- 500 contact cards

Our data set was extensive. Still, we must note that India has a population of 1.38 billion people who collectively speak more than 400 indigenous languages. Our analysis is based solely on the groups we were part of, and makes no claim to being representative of the political discourse across the country.

Data analysis

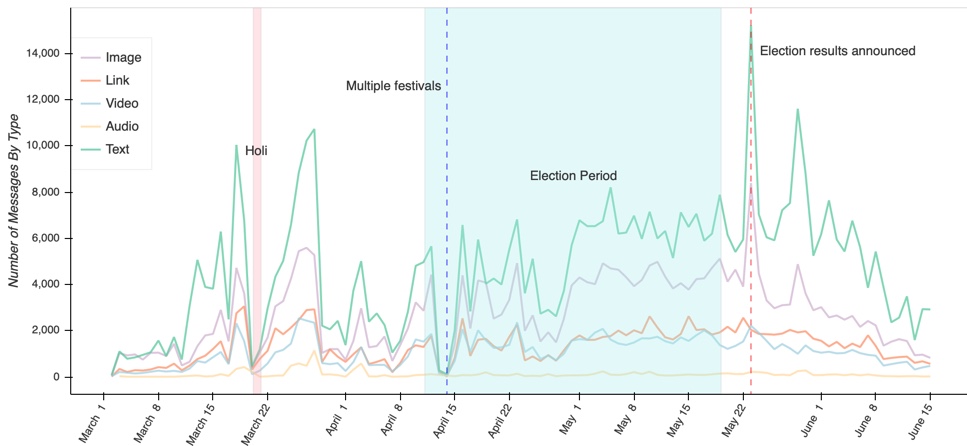

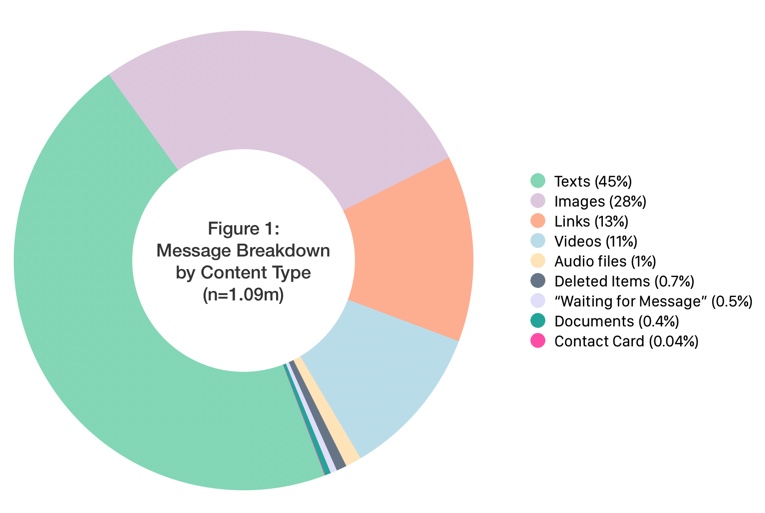

We collected a total of 1.09 million messages (including text, images, links, etc.) during our research study. Some groups were considerably more active than others. In fact, more than three quarters of the messages in our data set came from the two hundred fifty most prolific groups. And though text messages made up forty-five percent of all messages, a majority of the items tracked (fifty-two percent) were images, videos, and links, as can be seen in Figure 1.
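Figures like the share of messages coming from the most prolific groups, or the split by message type, come from simple frequency counts. A minimal sketch, assuming each extracted message has already been reduced to the ID of the group it was posted in and a type label such as `"text"` or `"image"`; the function names and input layout are hypothetical, not the study’s actual pipeline.

```python
from collections import Counter


def top_group_share(group_ids: list[str], n: int) -> float:
    """Fraction of all messages that came from the n most active groups.

    `group_ids` holds one entry per message: the ID of the group it was posted in.
    """
    counts = Counter(group_ids)
    from_top = sum(count for _, count in counts.most_common(n))
    return from_top / len(group_ids)


def type_shares(message_types: list[str]) -> dict[str, float]:
    """Share of each message type (text, image, video, link, ...) in the data set."""
    counts = Counter(message_types)
    total = len(message_types)
    return {kind: count / total for kind, count in counts.items()}
```

On the study’s data, `top_group_share(group_ids, 250)` would correspond to the “more than three quarters” figure above.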

WhatsApp is generally considered a distribution platform, meaning that users often repurpose media (images and videos) they didn’t create and don’t own when sharing them in groups. Given how increasingly affordable, and therefore ubiquitous, mobile bandwidth is in India, people tend to embrace colorful visuals over bland text. Visual messages tend to be more memorable, get more attention, and, as some studies have shown, inspire more trust among recipients. Research also indicates that they are shared more widely. For anyone trying to influence political dialogue, using images and videos seems an obvious strategy; it comes as no surprise that the Oxford Internet Institute found that misinformation on WhatsApp primarily takes the form of visual content.

The peaks and troughs of message frequency

Figure 2 shows the combined daily volume of text, image, link, video, and audio messages we collected over our three-and-a-half-month data collection period. Figure 3 breaks this down by message type. Daily message counts ranged from near zero to almost thirty thousand, with peaks and troughs influenced by daily news cycles, national holidays, and weekends. Notably, the most activity occurred not during the election cycle or in the run-up to it, but on May 23, 2019, the day the election results were announced.
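A daily activity series like the one in Figure 2 can be built by bucketing message timestamps by calendar date. The sketch below assumes timezone-aware timestamps and converts them to Indian Standard Time (UTC+5:30) first, so that a message sent shortly after midnight in India counts toward the right day. The helper names are hypothetical; `quiet_days` also reports days with no messages at all, which a plain count would silently skip.

```python
from collections import Counter
from datetime import date, datetime, timedelta, timezone

IST = timezone(timedelta(hours=5, minutes=30))  # Indian Standard Time


def daily_counts(timestamps: list[datetime]) -> Counter:
    """Count messages per calendar date in India (timestamps must be timezone-aware)."""
    return Counter(ts.astimezone(IST).date() for ts in timestamps)


def peak_day(counts: Counter) -> date:
    """The date with the most messages."""
    return max(counts, key=counts.__getitem__)


def quiet_days(counts: Counter, start: date, end: date, threshold: int) -> list[date]:
    """Days in [start, end] with fewer than `threshold` messages, including days with none."""
    days = []
    day = start
    while day <= end:
        if counts[day] < threshold:  # Counter returns 0 for missing days
            days.append(day)
        day += timedelta(days=1)
    return days
```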

Overall, the days with the highest volumes of messages followed major news events in the country. On March 17, for instance, Prime Minister Narendra Modi changed his Twitter name to “Chowkidar Narendra Modi”; prompted by an opposition campaign slogan, “Chowkidar chor hai” (“the watchman is a thief”), Modi co-opted the word “chowkidar” for himself and his supporters, creating the slogan “Main bhi Chowkidar” (“I am also a watchman”). That kicked off a wave of activity on social media, with members and supporters of BJP adding “Chowkidar” to their Twitter handles or using the hashtag #MainBhiChowkidar.

Ten days later, message activity peaked again. Although not specifically election-related, the success of “Mission Shakti” (“Mission Strength”), an anti-satellite missile test in which India shot down one of its own satellites in low Earth orbit, was a milestone for India’s space program, putting the country in a tier with Russia, the US, and China.

Two troughs, when the number of messages approached zero, are noteworthy too: March 20 and 21, and April 14. A plausible explanation for the negligible activity is that both periods coincided with festivals: first Holi, the Indian festival of color, and then various holidays celebrated in different states, including New Year’s Day and a spring harvest festival, all of which fell on April 14. During Holi, many of the videos shared showed people celebrating; on April 14, too, greetings and felicitations took up most of the conversation.

More than a third of the posts were forwards—and that’s troubling

As previously noted, content on WhatsApp is often co-opted from other social media networks. This means that a lack of attribution (who originally created a video) and provenance (where the video first appeared) tends to enable the spread of misinformation. It’s deceptive: the intimate nature of WhatsApp presents messages as if they were coming from a trusted source. Until mid-2018, recipients of forwarded messages were given no indication that what they were seeing had come from another chat group.

Recently, parent company Facebook has added some constraints to how messages are forwarded on WhatsApp. For one, users can forward a message to only five people or groups at once. Facebook has also added a “Forwarded” label where applicable. But this doesn’t account for content that’s been copied and pasted from elsewhere. Two months ago, Facebook also introduced a new label, “Frequently Forwarded,” in an effort to flag virality. That update came after the Indian elections, so it didn’t help our research, or users in India preparing to vote.

Our analysis shows that thirty-five percent of the media items in our dataset had been forwarded. In a handful of instances, users had forwarded the same message to ten or more groups at around the same time (presumably by hitting “Forward” on the original message multiple times). A recent study found that WhatsApp’s restriction on forwarding helped slow the spread of disinformation. (Because we have no data from before Facebook made the change, we can’t say whether it represents a significant improvement.)
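Both measurements in the paragraph above can be sketched in a few lines. The code assumes each message has been reduced to a hypothetical `Message` record with the sender’s number, the group, a hash of the content, a timestamp, and a flag for WhatsApp’s forwarded label; none of these field names come from the study. The five-minute window used to decide that forwards happened “at around the same time” is likewise an assumption.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Message:
    """Hypothetical per-message record extracted from the WhatsApp database."""
    sender: str
    group: str
    content_hash: str
    sent_at: datetime
    forwarded: bool


def forwarded_share(messages: list[Message]) -> float:
    """Fraction of messages carrying the forwarded label."""
    return sum(m.forwarded for m in messages) / len(messages)


def mass_forwards(messages: list[Message], min_groups: int = 10,
                  window: timedelta = timedelta(minutes=5)) -> list[tuple[str, str]]:
    """(sender, content_hash) pairs forwarded to >= min_groups distinct groups within one window."""
    by_key = defaultdict(list)
    for m in messages:
        if m.forwarded:
            by_key[(m.sender, m.content_hash)].append(m)

    hits = []
    for key, msgs in by_key.items():
        msgs.sort(key=lambda m: m.sent_at)
        start = 0
        for end in range(len(msgs)):
            # Slide the window start forward until it spans at most `window`.
            while msgs[end].sent_at - msgs[start].sent_at > window:
                start += 1
            if len({m.group for m in msgs[start:end + 1]}) >= min_groups:
                hits.append(key)
                break
    return hits
```

Grouping by sender and content hash means a message that many different users forward independently is not mistaken for one user’s burst.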

Most-shared items

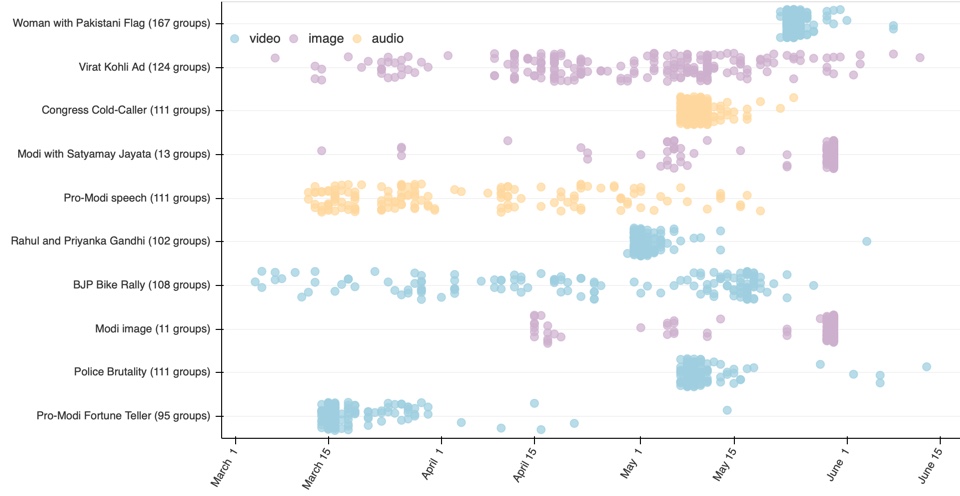

As part of our analysis, we identified the ten media items shared most widely across our dataset. Notably, the top ten items had no overlap with the most-forwarded items. As illustrated in Figure 4, the most-shared items (five videos, three images, and two audio files) typically followed one of two trajectories: some received a large number of shares in short, hyperactive bursts before petering out, whereas others had a longer tail and were shared steadily across the full election period.

The items that made their way onto our top ten list were, for the most part, sent to multiple groups more than once, not necessarily at the same time. A few of them were innocuous, but others were inflammatory.
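Ranking items by how widely they spread requires recognizing the same file when it appears in different groups. One simple approach, assumed here rather than taken from the study, is to hash each media file’s bytes with SHA-256 and count both total shares and distinct groups per hash. The function names are hypothetical. Exact hashing only catches byte-identical copies; re-encoded or cropped versions of a video would need a perceptual hash instead.

```python
import hashlib
from collections import Counter, defaultdict


def media_key(data: bytes) -> str:
    """Identify a media file by the SHA-256 digest of its bytes."""
    return hashlib.sha256(data).hexdigest()


def top_items(shares: list[tuple[str, bytes]], n: int = 10) -> list[tuple[str, int, int]]:
    """Rank media by total shares.

    `shares` holds one (group_id, media bytes) pair per posting.
    Returns (hash, total shares, distinct groups), most-shared first.
    """
    totals = Counter()
    groups = defaultdict(set)
    for group_id, data in shares:
        key = media_key(data)
        totals[key] += 1
        groups[key].add(group_id)
    return [(key, count, len(groups[key])) for key, count in totals.most_common(n)]
```

Reporting shares and distinct groups side by side mirrors figures such as “268 times across 167 groups” in the examples that follow.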

Perhaps that is unsurprising. After all, what the New York Times has called “outrage porn”—material “selected specifically to pander to our impulses to judge and punish and get us all riled up with righteous indignation”—is omnipresent within social networks. No matter the topic at hand, anger and disgust go viral.

In our dataset, a video called “Police Brutality,” which was intended to stoke outrage about India’s policing, was shared 141 times across 111 of our groups, mostly in the second week of May. The video was accompanied by a message encouraging all group members to share it. When news organizations looked into the footage, authorities told them that it was two years old.

The video in our dataset that was shared the most widely showed a woman sitting in front of a Pakistani flag, desecrating the Indian flag. She can be heard arguing that India should be torn to pieces. Similar to the police brutality video, this one was shared extensively in a short burst of time before the shares petered out: 268 times across 167 groups during the course of a week.

The “BJP Bike Rally” video, in contrast, had a much longer shelf life. This video, which appeared in our data set about 150 times, shows police-initiated violence breaking out during a rally in Calcutta. It was accompanied in places by text that reads, “Watch this horrible video to understand what condition West Bengal is in and how BJP workers are facing extreme atrocities,” and it seems likely that those sharing it considered it an effective way to help get out the vote. Indeed, sharing of the video dropped off almost completely after the election period.

The one exception among the inflammatory videos is a three-second clip without audio of the leader of the opposition, Rahul Gandhi, with his sister Priyanka Gandhi. This was likely shared again and again by Gandhi’s supporters in an effort to promote goodwill on his behalf.

Both audio files reflect anti-Congress/pro-BJP sentiment, but with completely different messages. The “Congress Cold-Caller” is a recording of a phone conversation; the caller tries to solicit a vote for Meenakshi Natarajan, a candidate for Congress, and the person at the other end of the line eviscerates her. The “Pro-Modi speech” is a monologue on how his rivals and opponents are all, unlike him, corrupt. Versions of the speech also appeared, without attribution, in comments on other websites, including Facebook and YouTube.

The second most-shared item—an advertisement for a mobile e-sports platform with Virat Kohli, the Indian cricket captain, as its brand ambassador—did the rounds throughout the time we were collecting data. A total of 138 accounts shared the advertisement; we saw it 219 times. The other two most-shared images were simply headshots of Modi.

Cross-network links

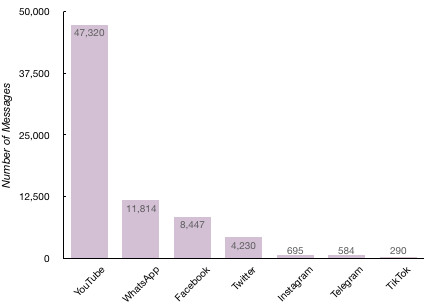

Of the 144,000 link messages in our dataset, more than half directed users to other social networks. Standalone links to India-only networks, Sharechat and Helo, represented a tiny share (213 total); when we also count links accompanied by media (images or videos), the number rises to 2,700, which is still relatively modest. As can be seen in Figure 5, YouTube received the overwhelming majority of links, at sixty-five percent. This is a trend we will be exploring in detail in a later post.
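A breakdown like Figure 5 starts by mapping each link’s hostname to a network. The sketch below uses Python’s standard `urllib.parse.urlparse` and matches a host either exactly or as a subdomain, so `m.facebook.com` counts as Facebook but `notyoutube.com` does not count as YouTube. The `NETWORKS` table is an illustrative assumption, not the study’s mapping, and is incomplete (Helo, for instance, is omitted).

```python
from collections import Counter
from urllib.parse import urlparse

# Illustrative, incomplete host-to-network table (an assumption, not the study's list).
NETWORKS = {
    "youtube.com": "YouTube",
    "youtu.be": "YouTube",
    "facebook.com": "Facebook",
    "instagram.com": "Instagram",
    "twitter.com": "Twitter",
    "whatsapp.com": "WhatsApp",
    "t.me": "Telegram",
    "sharechat.com": "Sharechat",
}


def network_for(url: str) -> str:
    """Return the social network a URL points to, or 'other'."""
    host = (urlparse(url).hostname or "").lower()
    for domain, network in NETWORKS.items():
        if host == domain or host.endswith("." + domain):
            return network
    return "other"


def network_shares(urls: list[str]) -> dict[str, float]:
    """Share of each network among links that point to a known social network."""
    counts = Counter(network_for(u) for u in urls)
    social = {name: c for name, c in counts.items() if name != "other"}
    total = sum(social.values())
    if total == 0:
        return {}
    return {name: c / total for name, c in social.items()}
```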

The links to WhatsApp and Telegram, another closed social network, were predominantly invitations to join other groups. In a handful of cases, links directed users to the WhatsApp Business API.

Links to news organizations are strikingly few. The Hindi version of NDTV, an Indian television media company, received the most links—just over 700. None of India’s other national news outlets had more than 300 links.

Conclusion

In March, Facebook announced its intent to make all messaging across its properties (Facebook, Instagram, and WhatsApp) secure. By ensuring that all conversations are end-to-end encrypted, Facebook is hoping to restore confidence in its trustworthiness and, it claims, promote “meaningful” interactions.

The importance of encrypting people’s personal data cannot be overstated, and giant companies like Facebook have an essential role to play. Facebook’s announcement triggered regulatory alarm, prompting the governments of the United States, the United Kingdom, and Australia to request that Facebook hold off on implementing its plans. In a letter, they argued that encryption makes it harder to crack down on illegal activity.

The conversation around end-to-end encryption is far bigger than Facebook, but the company’s scale makes it hard to exclude it from the conversation. As the information ecosystem heads further toward closed networks, where it’s easier to micro-target groups of people for nefarious political purposes, it becomes increasingly important to understand how closed environments operate and the best practices for examining them.

To that end, further installments in this series will continue to use the Indian elections as a jumping-off point to explore the ethical conundrums and practical considerations that newsrooms and academics should weigh when investigating closed networks.

Tow Center research fellow Ishaan Jhaveri contributed to this project.

Update: A previous version of this article misstated the number of links to India’s national news outlets found in the study’s data.
